Alibaba's Open-Source Z-Image: 6B Parameters Upend the AI Image Generation Paradigm, Delivering Blockbuster-Quality Texture in Only 8 Steps

A professional-grade AI image generation model that can run with only 6B parameters and 8-step sampling has arrived.

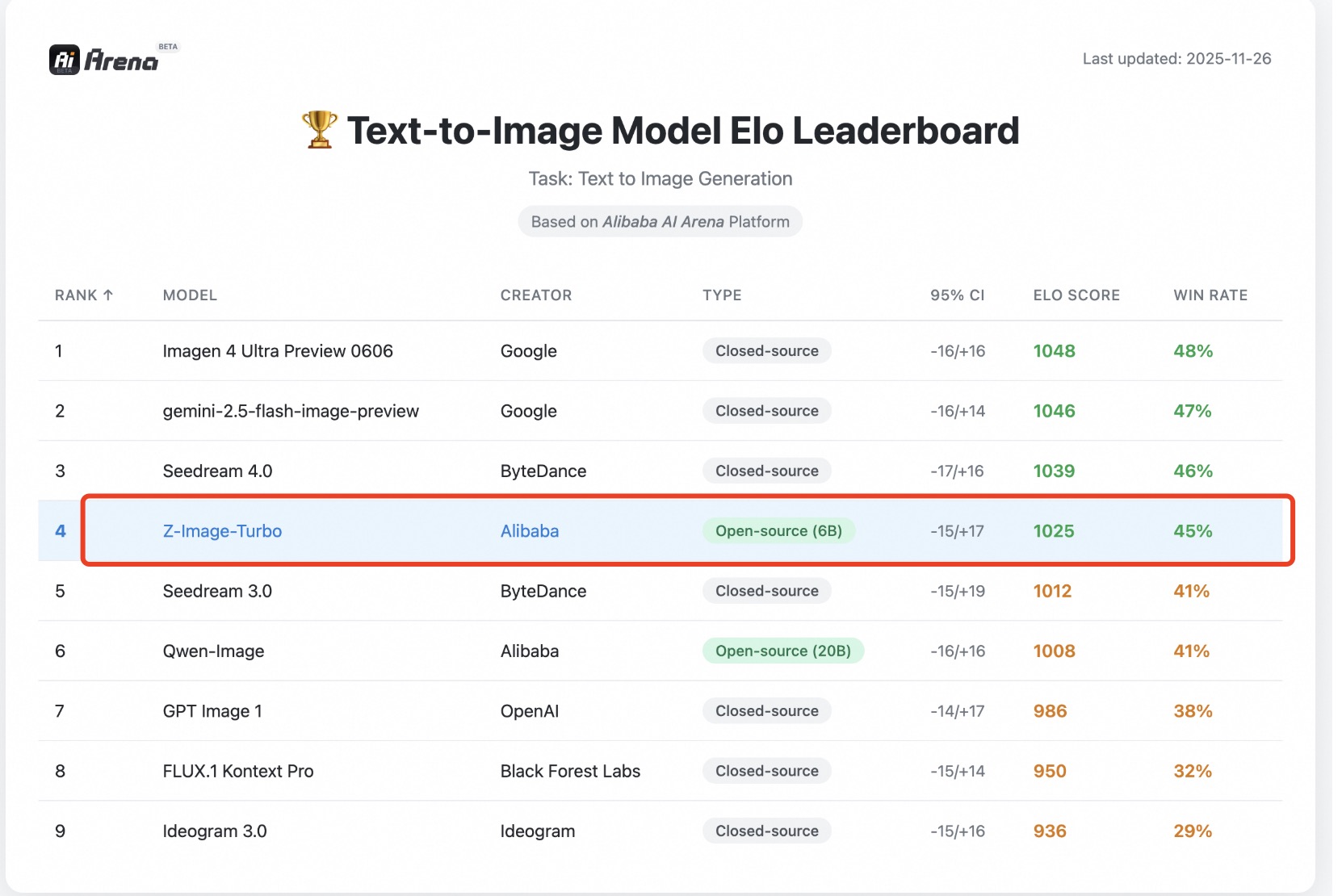

In AI image generation, parameter count has long seemed to be the gold standard for measuring model capability. Z-Image, the latest open-source model from Alibaba Tongyi Laboratory and a quiet entrant on the rankings, is challenging that assumption.

This lightweight model achieves visual quality close to that of 20B-class commercial models while using less than 16GB of VRAM, so consumer-grade graphics cards can run professional-level image generation tasks smoothly.

Low Usage, Great Power: Core Breakthroughs of Z-Image

On November 27th, Alibaba Tongyi Laboratory announced that it was open-sourcing the brand-new image generation model Z-Image. The model is based on a single-stream DiT architecture and comes in three variants, Turbo, Base and Edit, which are optimized for efficient inference, basic generation and image editing respectively.

It can render a 1024×1024 image in only 2.3 seconds on an RTX 4090 while occupying only 13GB of VRAM.

This means that ordinary developers can run this advanced image generation model with just an RTX 3060 graphics card, significantly lowering the threshold for AI creation.
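To put the 13GB figure in context, here is a rough back-of-the-envelope estimate of how much memory the weights alone would need. It assumes 16-bit weight storage (2 bytes per parameter), which the article does not state, and it ignores the text encoder, VAE and activations, so treat it as a sketch rather than a measurement.

```python
# Rough weight-memory estimate for a 6.15B-parameter model.
# ASSUMPTION (not from the article): weights are stored in 16-bit precision.

PARAMS = 6.15e9            # parameter count quoted in the article
BYTES_PER_PARAM = 2        # assumed bf16/fp16 storage
REPORTED_VRAM_GB = 13.0    # VRAM figure quoted in the article


def weight_memory_gb(params: float, bytes_per_param: int) -> float:
    """Return weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


weights_gb = weight_memory_gb(PARAMS, BYTES_PER_PARAM)
headroom_gb = REPORTED_VRAM_GB - weights_gb

print(f"Weights alone (16-bit): {weights_gb:.1f} GB")
print(f"Left over within the reported 13 GB: {headroom_gb:.1f} GB")
```

Under this assumption the weights account for most of the reported footprint, which is consistent with the claim that the model fits comfortably under 16GB.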

| Version | Parameter Count | Inference Steps | Positioning | Typical Scenarios |
| --- | --- | --- | --- | --- |
| Z-Image-Base | 6.15B | 50-100 | Open-source base | Scientific research, secondary fine-tuning |
| Z-Image-Turbo | 6.15B | 8 | Production main force | Posters, e-commerce, avatars, wallpapers |
| Z-Image-Edit | 6.15B | 8-12 | Image-to-image | Local text modification, background replacement, styling |

Technological Innovation: The Art of Full-Link Redesign

How can Z-Image, with only 6B parameters, match or even surpass 20B+ models in just 8 network forward passes?

The answer lies in a full-link redesign spanning data, architecture, training and inference, which turns "calculating less" into "calculating smarter". With less redundancy, more accurate gradients and shorter trajectories, it compresses diffusion sampling from 100 steps to 8 while maintaining high resolution, Chinese-English bilingual support and commercial-grade texture.

The data engine is one of the key factors in Z-Image's success. Through a four-module pipeline, Z-Image keeps "garbage data" out of training, saving 30% of computing power.

The Data Profiling Engine computes more than 40 feature dimensions for each image and directly discards images that fall below the threshold; the Cross-modal Vector Engine extracts image-text pair vectors using CN-CLIP, performs graph construction and deduplication on GPU, and can scan 1 billion images in 8 hours.
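The two ideas in this paragraph, threshold filtering and embedding-based deduplication, can be illustrated with a toy sketch. Everything here is hypothetical: the `quality` score, the thresholds and the three-dimensional embeddings are made up for illustration, and the quadratic comparison loop stands in for the GPU graph construction the article mentions. It is not Z-Image's actual pipeline.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def filter_and_dedup(records, min_quality=0.5, dup_threshold=0.95):
    """Drop low-quality records, then drop near-duplicates of kept records.

    Both thresholds are illustrative values, not figures from the article.
    """
    kept = []
    for rec in records:
        if rec["quality"] < min_quality:
            continue  # below the profiling threshold: discard
        if any(cosine(rec["embedding"], k["embedding"]) >= dup_threshold
               for k in kept):
            continue  # too similar to something already kept: discard
        kept.append(rec)
    return kept


records = [
    {"id": "a", "quality": 0.9, "embedding": [1.0, 0.0, 0.0]},
    {"id": "b", "quality": 0.2, "embedding": [0.0, 1.0, 0.0]},    # low quality
    {"id": "c", "quality": 0.8, "embedding": [0.99, 0.05, 0.0]},  # near-duplicate of "a"
    {"id": "d", "quality": 0.7, "embedding": [0.0, 0.0, 1.0]},
]
kept_ids = [r["id"] for r in filter_and_dedup(records)]
print(kept_ids)

# Throughput implied by "1 billion images in 8 hours".
images_per_second = 1_000_000_000 / (8 * 3600)
print(f"{images_per_second:,.0f} images per second")
```

The last two lines simply convert the quoted scan rate into images per second, which gives a sense of why the deduplication step is described as running on GPU.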

The S3-DiT single-stream architecture is another major innovation. Unlike the traditional dual-stream architecture, S3-DiT concatenates text tokens, image VAE tokens and semantic tokens into a single sequence and runs self-attention over it with the same QKV projections, increasing the parameter reuse rate by more than 30%.

Combined with 3D-RoPE positional encoding, the model can process text and image information more efficiently.

The Decoupled-DMD technology splits the traditional DMD into two parts: CA is responsible for learning undetermined high-frequency details, while DM supervises the whole process to ensure that the global color and composition stay on track.
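A minimal sketch of the single-stream idea follows: the three token types are joined into one sequence and passed through one self-attention layer with a single set of Q, K and V weights. The token counts, the dimension and the random weights are all made up for illustration, and the sketch omits multi-head attention, positional encoding and everything else a real DiT block contains.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(seq, w_q, w_k, w_v):
    """Single-head scaled dot-product attention with shared projections."""
    q, k, v = matmul(seq, w_q), matmul(seq, w_k), matmul(seq, w_v)
    scale = math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights


def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
DIM = 4  # illustrative embedding size

text_tokens = rand_matrix(3, DIM)      # hypothetical: 3 text tokens
image_tokens = rand_matrix(5, DIM)     # hypothetical: 5 image VAE tokens
semantic_tokens = rand_matrix(2, DIM)  # hypothetical: 2 semantic tokens

# Single stream: one sequence, one set of projection weights for all modalities.
sequence = text_tokens + image_tokens + semantic_tokens
w_q, w_k, w_v = (rand_matrix(DIM, DIM) for _ in range(3))

out, attn = self_attention(sequence, w_q, w_k, w_v)
print(len(out), len(out[0]))  # one output vector per token in the joint sequence
```

Because every token attends to every other token through the same weights, a text token and an image token interact in the same layer, whereas a dual-stream design would keep separate weights per modality.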

This decoupled design allows the model to generate high-quality images within 8 steps without color cast or detail loss.
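To make the "8 steps" figure concrete, the sketch below shows a generic fixed-step sampling loop in which each step costs one forward pass. The `velocity_fn` is a hypothetical stand-in for the network: it uses a toy straight-line trajectory from noise to a known target, which is an assumption chosen to keep the example self-contained. It does not reproduce Decoupled-DMD or Z-Image's actual sampler.

```python
def sample(velocity_fn, x, num_steps):
    """Integrate from t=1 (noise) to t=0 (image) with fixed-size Euler steps.

    Returns the final sample and the number of velocity_fn calls,
    i.e. the number of "network forward passes" in this toy setting.
    """
    calls = 0
    dt = 1.0 / num_steps
    t = 1.0
    for _ in range(num_steps):
        v = velocity_fn(x, t)
        calls += 1
        x = [xi - dt * vi for xi, vi in zip(x, v)]
        t -= dt
    return x, calls


# Toy data: a "noise" vector and the "image" it should be mapped to.
noise = [0.5, -1.0, 2.0]
target = [1.0, 0.0, -0.5]


def toy_velocity(x, t):
    """Hypothetical stand-in for the model: constant velocity along the
    straight line x_t = (1 - t) * target + t * noise."""
    return [n - g for n, g in zip(noise, target)]


result, forward_passes = sample(toy_velocity, noise, num_steps=8)
print(result, forward_passes)
```

In this toy case the trajectory is perfectly straight, so 8 steps land on the target; the point of a distilled few-step model is to make a small number of such passes sufficient on real images.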

Excellent Performance

In actual tests, Z-Image-Turbo has shown impressive performance. It not only generates images quickly, but also performs well in image quality and text rendering:

· Bilingual text rendering:

Z-Image performs extremely well in Chinese and English text rendering, and can accurately generate images containing Chinese and English characters.

· Detail fidelity:

The model shows high fidelity when rendering faces, objects and environmental details, and the texture of the generated images is close to professional photography.

· Prompt following:

Z-Image is strong at understanding complex instructions and can accurately interpret even ultra-long, nested Chinese descriptions and generate the corresponding images.

Practical Applications: From E-commerce to Creative Design

Z-Image's practicality shows in the range of scenarios it supports:

· E-commerce poster design:

Z-Image can quickly generate high-quality commercial posters and handles mixed Chinese and English typesetting well. For example, given the prompt "vertical cosmetic poster, main title 'Cherry Blossom Season Limited' in golden handwritten font, subtitle 'Spring Limited' in white thin line font, light pink gradient background, a bunch of cherry blossoms in the center", the model can produce a print-quality poster design in a few seconds.

· Image editing and optimization:

The Z-Image-Edit tool allows users to modify original images through natural language. For example, just input "change the background to the Sydney Opera House, modify the text on the held sign to 'Z-Image', delete the backpack", and the model can intelligently complete these modifications.

· Multilingual cultural design:

The model's understanding of different cultural elements is also striking. Given descriptions themed on Indian Diwali that include Hindi text and traditional cultural elements, it generates images that accurately reflect the specific cultural background.

Easy Deployment: A Boon for Ordinary Developers

Unlike many large models that require professional-grade hardware, Z-Image has an extremely low deployment threshold. The model has been open-sourced on GitHub, Hugging Face and ModelScope, adopting the Apache 2.0 license, which means individual developers and even enterprises can use and modify it freely.

Users who want to experience the model's capabilities can try it directly online through ArtAny AI.

Future Outlook: A New Milestone in the Open-Source Ecosystem

The release of Z-Image marks the accelerated evolution of AI image generation technology towards universal applications. Alibaba Tongyi Laboratory stated that it will continue to release complete, undistilled base models, opening the door for community-driven fine-tuning, customized workflows and the development of a wider open-source ecosystem.

Today, as AI technology increasingly becomes a core tool for productivity and creativity, Z-Image, with its lightweight deployment, excellent performance and extremely low usage threshold, is expected to become an important catalyst for the popularization of AI image technology.

Whether for professional creators improving productivity or developers building innovative applications, this open-source model, which combines performance, efficiency and ease of use, is injecting vitality into the AI visual creation ecosystem and opening up new possibilities for universal creative expression.